Integrating Legacy Network Maps into Remote Survey Workflows

Legacy network maps often exist as paper drawings, PDFs, or fragmented digital files that were never designed for remote survey workflows. Converting these resources into usable inputs requires systematic digitization, georeferencing, and metadata enrichment so that remote teams can overlay sensors, interpret subsurface features, and schedule targeted inspections with confidence.

How does legacy pipeline mapping work?

Converting legacy pipeline maps into digital, remote-ready formats starts with inventorying the available assets and assessing the fidelity of each map. Paper plans and scanned PDFs must be georeferenced to a modern coordinate reference system and reconciled with known control points. During digitization, capture attribute data such as pipe material, diameter, installation date, and estimated subsurface depth alongside the geometry. Accurate mapping reduces uncertainty for remote survey teams in three ways: it provides context for sensor placement, it lets historical records be correlated with live monitoring feeds, and it supports downstream analytics that account for subsurface variability.
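
The georeferencing step can be illustrated with a small sketch. It assumes a scanned plan can be related to map coordinates by a simple affine transform fitted to matched control points. Real workflows often need higher-order or projection-aware transforms, and the function names here (`fit_affine`, `apply_affine`, `rms_residual`) are illustrative, not part of any particular GIS package.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("control points are collinear or degenerate")
    solution = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        solution.append(_det3(m) / d)
    return solution


def fit_affine(pixels: List[Point], world: List[Point]):
    """Least-squares fit of X = a*col + b*row + c and Y = d*col + e*row + f."""
    if len(pixels) != len(world) or len(pixels) < 3:
        raise ValueError("need at least three matched control points")
    rows = [(col, row, 1.0) for col, row in pixels]
    # Normal equations: (A^T A) p = A^T t, solved once per output axis.
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]

    def solve_for(targets: List[float]) -> List[float]:
        atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
        return _solve3(ata, atb)

    return solve_for([w[0] for w in world]), solve_for([w[1] for w in world])


def apply_affine(params, pixel: Point) -> Point:
    """Map a scan pixel (col, row) to map coordinates."""
    (a, b, c), (d, e, f) = params
    col, row = pixel
    return (a * col + b * row + c, d * col + e * row + f)


def rms_residual(params, pixels: List[Point], world: List[Point]) -> float:
    """Root-mean-square misfit at the control points, in map units."""
    total = 0.0
    for p, w in zip(pixels, world):
        x, y = apply_affine(params, p)
        total += (x - w[0]) ** 2 + (y - w[1]) ** 2
    return (total / len(pixels)) ** 0.5


if __name__ == "__main__":
    # Hypothetical control points: scan pixels and matching map coordinates.
    px = [(0, 0), (1000, 0), (0, 1000), (1000, 1000)]
    wd = [(500000.0, 4000000.0), (500500.0, 4000000.0),
          (500000.0, 3999500.0), (500500.0, 3999500.0)]
    fitted = fit_affine(px, wd)
    print(apply_affine(fitted, (500, 500)), rms_residual(fitted, px, wd))
```

Storing the RMS residual with the digitized layer gives remote teams a simple measure of map fidelity. A large residual suggests the plan is distorted or that a control point was mismatched.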

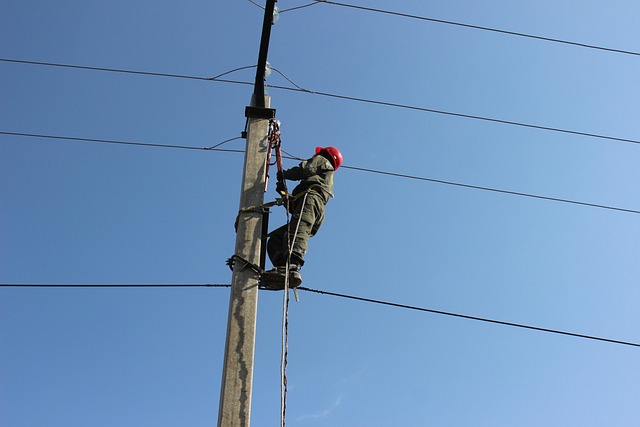

What sensors suit remote monitoring?

Selecting sensors for remote surveys depends on the detection objectives and site constraints. Acoustic sensors and fiber-optic sensing cables can detect transient noise events along a pipeline, while pressure transducers and flow meters provide continuous hydraulic signals. Thermal cameras mounted on drones or fixed platforms detect thermal anomalies associated with leaks or changes in soil moisture. Integrating these sensors with telemetry and edge processing lets remote teams monitor conditions in near real time and trigger targeted inspections when thresholds are exceeded.
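
As a rough illustration of threshold-based triggering at the edge, the sketch below flags a reading that deviates from a rolling baseline by more than `k` standard deviations. The class name `ThresholdTrigger`, the window size, and the default values are assumptions chosen for the example. A deployed system would tune them per sensor type and site.

```python
from collections import deque
from statistics import mean, pstdev


class ThresholdTrigger:
    """Flag readings that deviate strongly from a rolling baseline."""

    def __init__(self, window: int = 30, k: float = 4.0,
                 min_samples: int = 10, min_sigma: float = 0.01):
        self.history = deque(maxlen=window)  # recent non-anomalous readings
        self.k = k
        self.min_samples = min_samples
        self.min_sigma = min_sigma  # floor so a flat baseline is not oversensitive

    def update(self, value: float) -> bool:
        """Return True when the reading should trigger a targeted inspection."""
        triggered = False
        if len(self.history) >= self.min_samples:
            baseline = mean(self.history)
            spread = max(pstdev(self.history), self.min_sigma)
            triggered = abs(value - baseline) > self.k * spread
        if not triggered:
            # Keep flagged readings out of the baseline so a sustained
            # anomaly does not quietly become the new normal.
            self.history.append(value)
        return triggered


if __name__ == "__main__":
    trigger = ThresholdTrigger()
    # Hypothetical pressure readings in bar, ending with a sudden drop.
    for reading in [5.00, 5.01, 4.99, 5.00, 5.02, 4.98,
                    5.00, 5.01, 4.99, 5.00, 5.01, 4.20]:
        if trigger.update(reading):
            print("inspect: reading", reading)
```

Excluding flagged readings from the baseline is a design choice. It keeps a persistent leak visible, at the cost of needing a manual reset after a legitimate change in operating conditions.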

Can acoustics and thermography aid detection?

Acoustics and thermography are complementary modalities for leak identification. Acoustic methods capture sound signatures generated by escaping fluid and are effective for pressurized systems when ambient noise is manageable. Thermography measures surface temperature differences that can indicate fluid migration or changes in soil moisture, which makes it useful for both aboveground and shallow subsurface leaks. Both techniques require careful interpretation: acoustics may produce false positives from traffic or machinery, and thermography’s effectiveness varies with surface insulation, weather, and time of day.

How should pressure, calibration, and validation be handled?

Pressure monitoring is a primary indicator of system integrity but must be paired with rigorous calibration and validation procedures. Sensors should be calibrated against traceable standards and tested periodically to ensure drift is detected and corrected. Validation workflows combine remote sensor alerts with historical patterns, simulated leak scenarios, and selective field checks to confirm findings. Calibration records and validation outcomes should be logged in the mapping system so teams can weight data quality during triage and analytics.
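
One way to picture a calibration check and its logged record is sketched below. It assumes the sensor error is approximately linear, so a least-squares line from raw readings to reference values gives a usable correction. The `CalibrationRecord` fields and the sensor ID are hypothetical and are not drawn from any specific mapping system.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple


@dataclass(frozen=True)
class CalibrationRecord:
    sensor_id: str
    checked_on: date
    gain: float             # multiply raw reading by this
    offset: float           # then add this
    max_error: float        # largest |raw - reference| before correction
    within_tolerance: bool  # False means drift needs attention


def fit_linear_correction(readings: List[float],
                          references: List[float]) -> Tuple[float, float]:
    """Least-squares line mapping raw readings onto reference values."""
    n = len(readings)
    if n < 2 or n != len(references):
        raise ValueError("need at least two matched reading/reference pairs")
    mean_x = sum(readings) / n
    mean_y = sum(references) / n
    sxx = sum((x - mean_x) ** 2 for x in readings)
    if sxx == 0:
        raise ValueError("readings must span more than one value")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(readings, references))
    gain = sxy / sxx
    return gain, mean_y - gain * mean_x


def check_calibration(sensor_id: str, readings: List[float],
                      references: List[float], tolerance: float,
                      checked_on: date) -> CalibrationRecord:
    """Compare a sensor against reference values and build a log record."""
    gain, offset = fit_linear_correction(readings, references)
    max_error = max(abs(r - ref) for r, ref in zip(readings, references))
    return CalibrationRecord(sensor_id, checked_on, gain, offset,
                             max_error, max_error <= tolerance)


def correct(record: CalibrationRecord, raw: float) -> float:
    """Apply the stored correction to a raw reading."""
    return record.gain * raw + record.offset


if __name__ == "__main__":
    # Hypothetical check of a pressure transducer at three reference points.
    rec = check_calibration("PT-01", [0.2, 5.3, 10.4], [0.0, 5.0, 10.0],
                            tolerance=0.25, checked_on=date(2024, 1, 15))
    print(rec)
    print(correct(rec, 5.3))
```

Keeping `max_error` and `within_tolerance` on the record means triage code can down-weight a sensor that has drifted without re-running the calibration arithmetic.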

How do triage and analytics prioritize anomalies?

Analytics help triage large volumes of sensor data by scoring anomalies based on severity, location, and historical context. Algorithms can correlate acoustic spikes, pressure drops, and thermal anomalies to reduce false positives and to prioritize events that warrant immediate attention. Triage rules should include metadata from legacy maps, such as suspected subsurface obstacles or sensitive receptors, to guide the response. Human-in-the-loop validation remains essential: automated analytics can rank incidents, but experienced analysts must interpret ambiguous signatures and decide whether remote diagnostics or field intervention is required.
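
A toy scoring rule makes the idea concrete. The sketch below assumes each signal has already been normalized to the range 0 to 1. The weights, the corroboration bonus, and the sensitive-receptor multiplier are illustrative values rather than recommended settings. The output is a ranking for an analyst to review, not a decision.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Anomaly:
    event_id: str
    acoustic: float        # normalized acoustic spike strength, 0..1
    pressure_drop: float   # normalized pressure drop, 0..1
    thermal: float         # normalized thermal anomaly, 0..1
    near_sensitive_receptor: bool = False  # from legacy map metadata
    data_quality: float = 1.0              # 0..1, e.g. from calibration logs


def score(a: Anomaly) -> float:
    """Combine signals into a single priority score in the range 0..1."""
    base = 0.4 * a.acoustic + 0.4 * a.pressure_drop + 0.2 * a.thermal
    # Reward agreement between independent modalities to cut false positives.
    corroborating = sum(s >= 0.5 for s in (a.acoustic, a.pressure_drop, a.thermal))
    if corroborating >= 2:
        base *= 1.25
    if a.near_sensitive_receptor:
        base *= 1.2
    return min(1.0, base * a.data_quality)


def triage(anomalies: List[Anomaly]) -> List[Anomaly]:
    """Return anomalies ordered from highest to lowest priority."""
    return sorted(anomalies, key=score, reverse=True)


if __name__ == "__main__":
    # Hypothetical events for illustration only.
    events = [
        Anomaly("B", acoustic=0.9, pressure_drop=0.0, thermal=0.0),
        Anomaly("A", acoustic=0.9, pressure_drop=0.8, thermal=0.1),
        Anomaly("C", 0.6, 0.6, 0.6, near_sensitive_receptor=True, data_quality=0.5),
    ]
    for event in triage(events):
        print(event.event_id, round(score(event), 3))
```

In this scheme a lone acoustic spike ranks below an event where acoustic and pressure signals agree, which mirrors the aim of correlating modalities before dispatching a crew.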

Integrating subsurface data with remote mapping

Incorporating subsurface information improves the accuracy of remote surveys. Subsurface models derived from historical maps, borehole logs, or ground-penetrating radar help predict where leaks might surface and how a leak signature will attenuate. When integrating subsurface layers into a remote mapping platform, preserve provenance and uncertainty metrics so that analysts understand confidence levels. Cross-referencing subsurface data with sensor footprints enables optimized sensor placement, better calibration of expected signal levels, and more effective validation strategies for suspected anomalies.
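
To show what preserving provenance and uncertainty can look like in practice, the sketch below merges depth estimates from different sources using inverse-variance weighting. It assumes the errors are independent and roughly Gaussian, which may not hold when two sources share the same underlying survey. The `DepthEstimate` type and the source labels are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt
from typing import List


@dataclass(frozen=True)
class DepthEstimate:
    depth_m: float   # estimated depth to pipe, metres
    sigma_m: float   # one standard deviation of uncertainty, metres
    source: str      # provenance label, e.g. map sheet or survey ID


def combine(estimates: List[DepthEstimate]) -> DepthEstimate:
    """Inverse-variance weighted mean; assumes independent errors."""
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(e.sigma_m <= 0 for e in estimates):
        raise ValueError("sigma_m must be positive")
    weights = [1.0 / e.sigma_m ** 2 for e in estimates]
    total = sum(weights)
    depth = sum(w * e.depth_m for w, e in zip(weights, estimates)) / total
    # Keep every contributing source so the merged value stays traceable.
    provenance = " + ".join(e.source for e in estimates)
    return DepthEstimate(depth, sqrt(1.0 / total), provenance)


if __name__ == "__main__":
    # Hypothetical inputs: a coarse legacy-map depth and a tighter radar pick.
    merged = combine([
        DepthEstimate(1.2, 0.5, "legacy map"),
        DepthEstimate(1.0, 0.1, "GPR survey"),
    ])
    print(merged)
```

The merged estimate leans toward the source with the smaller uncertainty, and the combined provenance string lets an analyst trace the value back to its inputs.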

Conclusion

Bridging legacy network maps with contemporary remote survey workflows combines careful digitization, sensible sensor selection, disciplined calibration, and data-driven analytics. Maintaining clear metadata, tracking validation steps, and embedding subsurface context into mapping platforms improves detection reliability and helps teams prioritize resources. A structured approach ensures that historical records become a practical asset for ongoing monitoring, triage, and decision-making without overstating capabilities or replacing necessary field verification.